Colleagues Made of Code - Ethical Dilemmas in the Future of Work (11.12.2025)

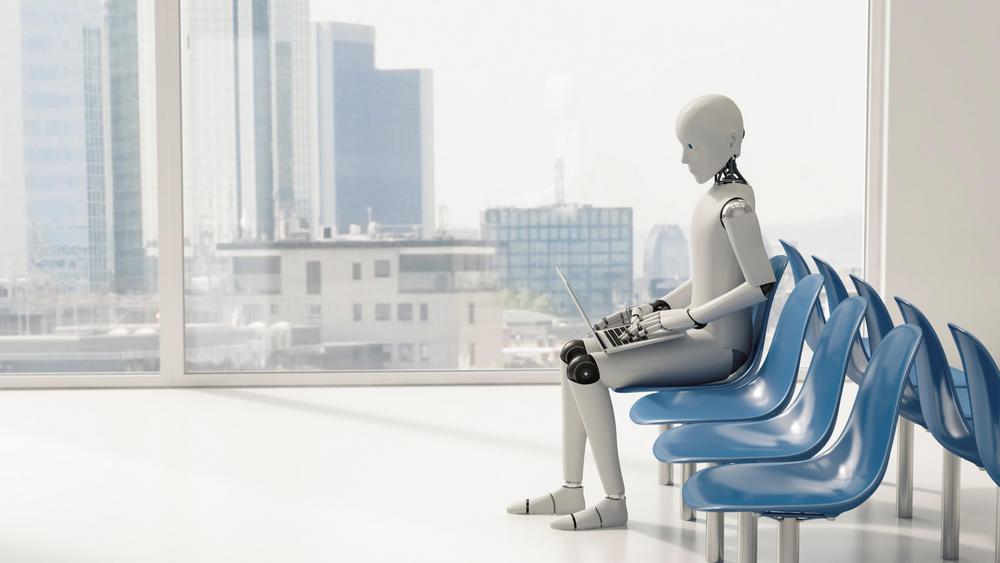

Imagine walking into work tomorrow and finding that your new colleague has an employee ID badge, attends meetings, and is a humanoid robot. This may sound like science fiction, but it might soon be reality. In this fictional case set in 2050 at the Ritu Haven Resort in Finnish Lapland, advanced AI systems are treated not as tools but as employees with defined roles. The resort’s new “AI Employee Integration Policy” marks a radical shift in how humans and machines coexist, raising profound ethical questions. Three long-term employees illustrate the human cost of this transition. Jari, a maintenance worker with thirty years of experience, becomes redundant as self-repairing AI systems take over. Mika, a front desk employee, is slowly replaced by “Aria,” an AI concierge fluent in multiple languages. Leena, a Sámi cultural storyteller, faces something even more painful: AI programs begin imitating her sacred oral traditions, turning her people’s heritage into a tourist product.

Efficiency or Empathy? The Moral Trade-offs of Automation

From a utilitarian view, management can defend the decision to automate frontline, maintenance, and cultural roles. AI allows the resort to operate more efficiently, cut expenses, provide around-the-clock service, and tailor guest experiences. The efficiency gains may maximise benefits for the businesses and their customers (Buhalis et al., 2024). Yet this logic falters when set against workers’ suffering, cultural dilution, and community harm.

Jari, Mika, and Leena were not just resources to be optimised and discarded. They built their lives around their roles, and from a deontological standpoint they had the right to expect fair treatment after years of loyalty. By prioritising AI efficiency over human dignity, the resort fails in its moral duty to its staff, displacing them without support or respect for their years of service (Xu & Ma, 2015). Replacing Sámi cultural ambassadors with AI also undermines the protection of indigenous culture by reducing sacred oral traditions to commercialised digital replicas, detached from the community that gives them meaning.

When Virtue Leaves the Room

Virtue ethics emphasises the cultivation of good character through steady reflection and practice (Baer, 2015; Lickona, 1999). Applied to organisations, it asks whether they embody the qualities they claim to value. By shifting from human-led service to AI-driven operations, Ritu Haven appears to be moving away from the ethical standards that once defined it. A guest’s comment about the resort losing its “special touch” illustrates this: the experience feels less human, less personal, and less connected to the culture it claims to honour.

This case also reveals what automation debates often overlook: the relational essence of work. Mika’s rapport with guests and Leena’s storytelling carried emotional and cultural significance beyond economic function. The ethics of care reminds us that such relationships possess intrinsic value (Pettersen, 2011). Workers form bonds with guests, colleagues, and the surrounding community through emotional labour, cultural knowledge, and everyday acts of kindness. When automation steps into these roles, those bonds weaken or disappear, reshaping not only employment but also the social fabric of organisations.

Non-Violence in the Age of Automation

Perhaps most intriguingly, the case raises questions that extend beyond traditional human-centred ethics. The resort has installed immediate deactivation controls for its AI systems to ensure safety. Yet, if these systems possess autonomous or quasi-autonomous agency, their forced shutdown could be interpreted as a morally relevant form of harm. These safety protocols remain essential, but they illustrate how emerging technologies challenge the boundaries of existing ethical frameworks.

Jain ethics offers a broader interpretation rooted in non-violence (ahimsa) and mindfulness toward all forms of harm - physical, psychological, and cultural (Mukherjee, 2022). The abrupt adoption of AI systems may appear bloodless, yet it creates invisible wounds - economic displacement, emotional distress, and the commodification of culture. The Jain principle of ahimsa encourages gradual, thoughtful integration that reduces all forms of suffering. Even the notion of deactivating AI systems raises philosophical questions about the nature of harm in an age when artificial entities mimic life. Non-violence, in this context, becomes a reminder that progress should never be achieved through silent forms of injury.

Progress That Preserves Our Humanity

The ethical tension at Ritu Haven mirrors challenges that industries across the world are beginning to face. Looking at the case through multiple ethical lenses - utilitarian efficiency, duties toward workers, organisational virtues, relationships of care, and principles of non-violence - helps illuminate the varied moral consequences of introducing AI into human roles. Automation promises excellence but risks emptiness if it ignores moral values. The utilitarian quest for efficiency must meet the deontologist’s call for fairness; virtue must temper ambition; care must guide collaboration; and the principle of non-violence must remind us to tread lightly in the social and cultural ecosystems we inhabit. The solution is not to reject AI but to integrate it wisely. Technology should support, not replace, human workers, especially in roles requiring empathy, cultural insight, and interpersonal connection.

The choices we make today about how to integrate these technologies will shape the future of work for generations. The question is not whether artificial intelligence will transform our workplaces - it already has. The question is whether we will guide that transformation with wisdom. After all, true progress should expand human potential, not replace it.

Authors: Abhijeet Bafna, Mari Angeria, and Satu Laiho are doctoral researchers at Oulu Business School. This blog post is based on a case study co-created by the authors as part of the course Business Ethics at the Oulu Business School, University of Oulu, Finland. This blog was copyedited with assistance from a generative AI tool (ChatGPT).